Nent

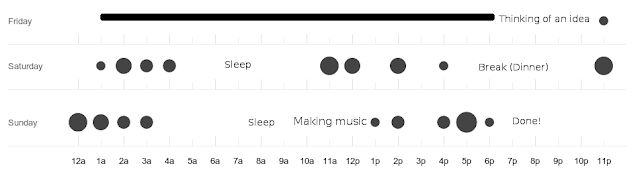

Nent is an Asteroids-esque game with upgrades and metaball fluid effects. It is also my submission to Ludum Dare 28, a 48-hour game jam competition whose theme was 'You only get one'. This is my first time participating in #LD48, and it has been quite the experience. To get a sense of how it felt, here is my GitHub punch card showing my commits over the 48-hour period:

The most difficult part of creating the game was not the coding, but coming up with a decent idea in the first place. The theme ('You only get one') is pretty vague and open to interpretation, and there are many ways to go about it, which makes coming up with an idea that much harder. In the end, after thinking about it for over 2 hours, I decided to just make something that looked decent and worry about mechanics and theme later.

Before I get into some of the interesting technical bits, I want to go over the tools I used to create this game. Instead of my usual web IDE, I opted for Light Table, which greatly increased my coding productivity through its JavaScript injection magic. For image editing (the power-ups), I used GIMP, my main tool for any sort of image manipulation or creation. Lastly, I used LMMS (Linux MultiMedia Studio), a free music composition tool, to create the game's music. This was my first real attempt at creating digital music, and I found it both enlightening and horribly difficult. For anyone looking to try out LMMS, I recommend this video tutorial to get started.

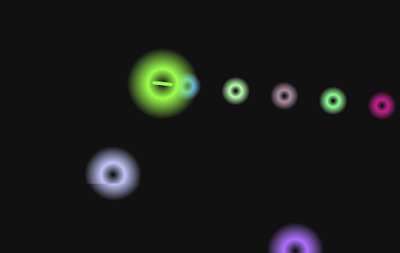

Now I'm going to dive right into the most interesting aspect of the game: the liquid effect when objects collide. It uses the same technique as metaballs. Metaballs (demo) work by drawing overlapping gradients and then thresholding the combined result. For example, here is what the game looks like before processing:

Ignoring the background color for a moment, the gradients are created by varying the alpha value (opacity) of the color with distance from the center. This is done using HTML5 canvas radial gradients:

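```javascript
// `color` comes from a color library (assumed): alpha(a) sets the opacity and
// rgbaString() returns the color as a CSS rgba() string.
var grad = ctx.createRadialGradient(x, y, 1, x, y, size)
grad.addColorStop(0, color.alpha(0).rgbaString())   // transparent at the center
grad.addColorStop(.4, color.alpha(1).rgbaString())  // fully opaque 40% of the way out
grad.addColorStop(1, color.alpha(0).rgbaString())   // transparent again at the edge
ctx.fillStyle = grad
// then fill a shape that covers the gradient, e.g. a circle of radius `size`
ctx.beginPath()
ctx.arc(x, y, size, 0, Math.PI * 2)
ctx.fill()
```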
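
To make the idea of overlapping gradients concrete, here is a one-dimensional sketch in plain JavaScript (no canvas needed). It assumes the position mapping the canvas uses for concentric radial gradients and the default `source-over` compositing, under which a new alpha `a` drawn over an existing alpha `b` gives `a + b * (1 - a)` rather than a strict sum. The helper names are hypothetical, and the 0.85 cut-off is borrowed from the shader later in this post.

```javascript
// Alpha profile of the gradient above: stop 0 -> alpha 0, stop 0.4 -> alpha 1,
// stop 1 -> alpha 0, linearly interpolated in between. For concentric circles
// (inner radius 1, outer radius `size`), a point at distance d from the center
// sits at position t = (d - 1) / (size - 1) along the gradient.
function gradientAlpha(d, size) {
  const t = (d - 1) / (size - 1);
  if (t <= 0 || t >= 1) return 0; // outside the ramp: both end stops are transparent
  if (t <= 0.4) return t / 0.4;   // ramp up from 0 to 1
  return 1 - (t - 0.4) / 0.6;     // ramp back down from 1 to 0
}

// Default canvas "source-over" compositing of alpha: new (src) over existing (dst).
function sourceOverAlpha(src, dst) {
  return src + dst * (1 - src);
}

// Alpha at position x after drawing each blob in turn (1D slice through their centers).
function mergedAlphaAt(x, centers, size) {
  let alpha = 0;
  for (const c of centers) {
    alpha = sourceOverAlpha(gradientAlpha(Math.abs(x - c), size), alpha);
  }
  return alpha;
}

const THRESHOLD = 0.85; // alpha cut-off on the 0.0-1.0 scale

// Two blobs 62px apart, sampled at the midpoint (31px from each center).
const single = mergedAlphaAt(31, [0], 51);     // about 0.667
const merged = mergedAlphaAt(31, [0, 62], 51); // about 0.889
console.log(single >= THRESHOLD, merged >= THRESHOLD); // false true
```

Neither blob alone reaches the cut-off at the midpoint, but the two together do, which is exactly the "bridge" that makes nearby blobs look like they are flowing into each other.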

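Next, we iterate over every pixel and check whether its alpha value is above a 'threshold', fading out the pixels that are not. Remember that where gradients overlap, their alpha values build on each other, so the area between two nearby blobs can cross the threshold even when neither blob does on its own. This gives the following effect: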

However, we soon notice that the CPU is pegged at 100% (of one core). This is because the loop runs on a single thread and its cost grows linearly with the number of pixels, so doubling the canvas's width and height quadruples the work. Here is the original code (used in the demo):

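```javascript
// Copy the canvas pixels out as a flat RGBA byte array (4 bytes per pixel)
var imageData = ctx.getImageData(0, 0, width, height),
    pix = imageData.data;

// Visit the alpha byte (i + 3) of every pixel; `threshold` is on the 0-255 scale
for (var i = 0, n = pix.length; i < n; i += 4) {
  if (pix[i + 3] < threshold) {
    pix[i + 3] /= 6; // fade pixels below the threshold
    // as written, this can't pass once the value has been divided by 6
    if (pix[i + 3] > threshold / 4) {
      pix[i + 3] = 0;
    }
  }
}

// Write the filtered pixels back to the canvas
ctx.putImageData(imageData, 0, 0);
```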
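
Because the loop only needs a flat RGBA byte array, it can be lifted out of the browser and timed directly. The sketch below is a hypothetical standalone version (`thresholdAlpha` and `timeFilter` are illustrative names) that leaves out the inner check, since that check can never pass. It measures only the loop itself, not the `getImageData`/`putImageData` copies, and absolute timings depend heavily on the machine and JavaScript engine, so treat the numbers as relative.

```javascript
// The demo's per-pixel pass on a plain RGBA byte array, so it runs without a
// canvas. `threshold` is on the 0-255 byte scale, like ImageData values.
function thresholdAlpha(pix, threshold) {
  for (let i = 3, n = pix.length; i < n; i += 4) { // i indexes each alpha byte
    if (pix[i] < threshold) {
      pix[i] /= 6; // fade; Uint8ClampedArray rounds the result to an integer
    }
  }
  return pix;
}

const FRAME_BUDGET_MS = 1000 / 60; // about 16.7 ms per frame for 60 FPS

// Time one pass over a width x height buffer (every alpha set to 128).
function timeFilter(width, height, threshold) {
  const pix = new Uint8ClampedArray(width * height * 4).fill(128);
  const start = performance.now();
  thresholdAlpha(pix, threshold);
  return performance.now() - start;
}

// Each size doubles both dimensions of the previous one: 4x the pixels.
for (const [w, h] of [[640, 480], [1280, 960], [2560, 1920]]) {
  const ms = timeFilter(w, h, 200);
  console.log(`${w}x${h}: ${w * h} pixels, ${ms.toFixed(2)} ms of a ${FRAME_BUDGET_MS.toFixed(1)} ms budget`);
}
```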

As pix.length increases, the loop takes proportionally longer. Eventually we can no longer finish the computation within the roughly 16 ms per frame (1000 ms / 60) required for 60 FPS. Luckily, I came up with a solution. If you remember my slideshow project, where I animated 1.75 million particles in real time, I was able to leverage GPU shaders to greatly improve rendering performance. I am going to do the same here, using a library called Glsl.js (https://github.com/gre/glsl.js). This library greatly simplifies the process of using GLSL (OpenGL Shading Language) shaders and applying them to the canvas I am already drawing to, so there is no need to rewrite the drawing code in WebGL.

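```javascript
GAME.glsl = Glsl({
  canvas: GAME.outCanv,            // WebGL canvas that displays the shader output
  fragment: $('#fragment').text(), // fragment shader source, read from its <script> tag (jQuery)
  variables: {
    canv: GAME.canv                // the 2D game canvas, seen in the shader as `uniform sampler2D canv`
  },
  update: function(time, delta) {
    animate(time)                  // the game's own per-frame update and drawing
    this.sync('canv')              // push the freshly drawn canvas to the shader
  }
})
```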

And now the shader code, which replaces the 'for' loop over the pixels:

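```html
<script id="fragment" type="x-shader/x-fragment">
  precision mediump float;

  uniform vec2 resolution;      // output canvas size in pixels
  uniform sampler2D canv;       // the 2D game canvas, uploaded as a texture

  const float threshold = 0.85; // alpha cut-off on the 0.0-1.0 scale

  void main (void) {
    vec2 p = gl_FragCoord.xy / resolution.xy; // this pixel's texture coordinate
    vec4 col = texture2D(canv, p);

    if (col.a < threshold) {
      col.a /= 4.0;             // fade pixels below the cut-off
      // never true after the division above; kept from the CPU version
      if (col.a > threshold / 4.0) {
        col.a = 0.0;
      }
    }

    gl_FragColor = col;
  }
</script>
```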
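
One difference between the two versions is easy to miss: the CPU loop compares raw bytes (0-255), while `texture2D` returns normalized floats, so an 8-bit alpha of `b` reads as `b / 255` in the shader. The hypothetical helpers below restate the shader's per-pixel rule in JavaScript under that assumption. `fragToUV` mirrors `gl_FragCoord.xy / resolution.xy`, where `gl_FragCoord` sits at pixel centers (x + 0.5, y + 0.5).

```javascript
// JavaScript restatement of the fragment shader's per-pixel rule.
const THRESHOLD = 0.85; // shader's alpha cut-off, on the 0.0-1.0 scale

// An 8-bit canvas value b is read by texture2D as b / 255.
function byteToUnit(b) { return b / 255; }
function unitToByte(u) { return Math.round(u * 255); }

// gl_FragCoord.xy / resolution.xy for the pixel at column x, row y.
function fragToUV(x, y, width, height) {
  return [(x + 0.5) / width, (y + 0.5) / height];
}

// The shader's effective branch: keep alpha at or above the cut-off, fade the rest.
function shadeAlpha(a) {
  return a < THRESHOLD ? a / 4.0 : a;
}

console.log(unitToByte(THRESHOLD));       // 217, the equivalent byte threshold
console.log(shadeAlpha(byteToUnit(255))); // 1, a fully opaque pixel is kept
```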

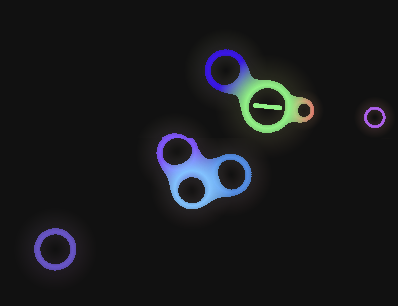

Now let's see what it looks like:

Oh no, that doesn't look right. Something odd is going on here. I'll skip my adventure into blender land and get right to the solution. By default, the canvas is alpha-composited with whatever sits beneath it, which means colors underneath the canvas get absorbed into its final colors. Our web page has a background with alpha 1.0, which causes every pixel to register in our metaball filter. To avoid this, we must modify the Glsl.js library and add the following code to change the default blending behavior (line 602, in the load() function):

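```javascript
// `gl` is the library's WebGL rendering context
gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA); // out = src * srcAlpha + dst * (1 - srcAlpha)
gl.enable(gl.BLEND);                                // blending is disabled by default in WebGL
```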
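
To see what those two calls change, here is the blend arithmetic written out in JavaScript. With blending enabled and the default `FUNC_ADD` equation, each output channel is `src * srcAlpha + dst * (1 - srcAlpha)`, and `blendFunc` applies the same factors to the alpha channel as to RGB. This is an illustrative model of the fixed-function math (the function name is hypothetical), not Glsl.js code.

```javascript
// Per-channel result of gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA)
// with the default FUNC_ADD blend equation. Channels are on the 0.0-1.0 scale.
function blendSrcAlpha(src, dst) {
  const k = src.a; // SRC_ALPHA factor; ONE_MINUS_SRC_ALPHA is 1 - k
  return {
    r: src.r * k + dst.r * (1 - k),
    g: src.g * k + dst.g * (1 - k),
    b: src.b * k + dst.b * (1 - k),
    a: src.a * k + dst.a * (1 - k), // the same factors apply to alpha
  };
}

// A half-transparent red fragment drawn over a cleared (transparent black) buffer.
console.log(blendSrcAlpha({ r: 1, g: 0, b: 0, a: 0.5 }, { r: 0, g: 0, b: 0, a: 0 }));
// { r: 0.5, g: 0, b: 0, a: 0.25 }
```

Over a transparent buffer, the color channels come out multiplied by alpha and the alpha itself is squared. That premultiplied form is what a WebGL canvas expects by default (`premultipliedAlpha: true`) when the browser composites it over the page.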

And that's it! Now our game renders properly. In addition to using shaders, the game also uses fixed time-step physics, which you can read more about in my post on The Pond.
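
For readers who haven't seen the technique, a fixed time-step loop is usually built around an accumulator: real frame time is added to it, and the physics advances in constant-size steps until less than one step remains. The sketch below is a generic version of that pattern, not the code from The Pond or from Nent. `step` is a hypothetical physics callback, and the 250 ms cap is an arbitrary safety limit.

```javascript
// Generic fixed time-step loop built on an accumulator: physics always advances
// in equal `stepMs` increments, however long each rendered frame actually took.
function makeFixedStepper(step, stepMs = 1000 / 60) {
  let accumulator = 0;
  return function advance(frameDeltaMs) {
    // Cap very long frames (e.g. after a background tab) so we don't try to
    // catch up with hundreds of steps at once; 250 ms is an arbitrary choice.
    accumulator += Math.min(frameDeltaMs, 250);
    let steps = 0;
    while (accumulator >= stepMs) {
      step(stepMs);          // hypothetical physics update, always the same dt
      accumulator -= stepMs;
      steps++;
    }
    return steps;            // leftover time stays in the accumulator
  };
}

// Usage with a 10 ms step: two 25 ms frames run 2 and then 3 steps.
let simulated = 0;
const advance = makeFixedStepper(dt => { simulated += dt; }, 10);
console.log(advance(25), advance(25), simulated); // 2 3 50
```

Keeping `dt` constant makes the simulation behave the same regardless of frame rate, which matters for collisions and upgrades that should not depend on how fast the player's machine is.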

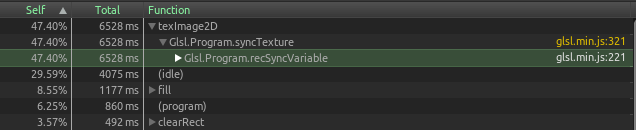

To optimize the game further (for mobile devices, for example), the main remaining bottleneck is converting the canvas into a WebGL texture every frame.
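
For a rough sense of scale, the upload size is easy to estimate. Assuming a hypothetical 800x600 game canvas (the post doesn't state Nent's actual size) uploaded as 8-bit RGBA, every frame moves width * height * 4 bytes:

```javascript
// Rough size of the per-frame canvas -> texture upload (RGBA, 1 byte per channel).
function uploadCost(width, height, fps) {
  const bytesPerFrame = width * height * 4;
  return { bytesPerFrame, bytesPerSecond: bytesPerFrame * fps };
}

console.log(uploadCost(800, 600, 60)); // { bytesPerFrame: 1920000, bytesPerSecond: 115200000 }
```

That is about 1.9 MB per frame, or roughly 115 MB per second at 60 FPS, and it scales with the canvas area just like the original CPU loop did.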

This could be solved by moving the entire drawing process into GPU shaders and forgoing the canvas altogether. However, the task is non-trivial, and I did not have enough time during the 48 hours to attempt it.
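
One common way to do that (not something Nent implements) is to drop the gradient texture entirely and evaluate a metaball field function per pixel, passing blob positions and radii to the shader as uniforms. The classic inverse-square field is shown below in JavaScript for readability; it is a swapped-in formulation, not the gradient-and-threshold approach used in the game, and the names are hypothetical.

```javascript
// Classic metaball field: f(p) = sum over blobs of r^2 / |p - c|^2.
// Points where f >= 1 are "inside"; fields of nearby blobs add up and merge.
function field(px, py, blobs) {
  let sum = 0;
  for (const { x, y, r } of blobs) {
    const dx = px - x;
    const dy = py - y;
    const d2 = dx * dx + dy * dy;
    sum += d2 === 0 ? Infinity : (r * r) / d2; // treat a blob's exact center as inside
  }
  return sum;
}

function inside(px, py, blobs) {
  return field(px, py, blobs) >= 1;
}

// Two blobs of radius 10, 24px apart; test the midpoint (12px from each).
const blobs = [{ x: 0, y: 0, r: 10 }, { x: 24, y: 0, r: 10 }];
console.log(inside(12, 0, [blobs[0]]), inside(12, 0, blobs)); // false true
```

In a shader, `blobs` would become a fixed-size uniform array (GLSL ES 1.0 loops need a constant bound), and the result could be written straight to `gl_FragColor` with no canvas upload at all.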

Participating in Ludum Dare was awesome, and I look forward to doing it again soon.